The creative space has been heating up again in recent weeks with Adobe’s “Firefly” project and Microsoft “Designer”. Putting aside the craze for algorithmically generated images tied to blockchains (e.g., NFTs), DALL-E and DALL-E 2 arguably started the current renaissance in generative-AI-based image creation. Services like Midjourney then took the fidelity and creativity of those outputs to further heights, approaching the output through the lens of rendering engines like Unreal and Unity. AI-generated images seem to be here to stay, and more and more computing power is being thrown at them. For instance, NVIDIA and Adobe have partnered, and we already know Microsoft is a backer of OpenAI. Discussions are ongoing, though, about the ethical, and in some cases legal, concerns over the sources of the images used to train the machine learning models that generate these ‘new’ images.

We have also seen the rise of AI-generated voice from places like ElevenLabs, where users can train a model on their own voice to have an AI speak as they would. All of this, albeit cool, is on the generative-creative side of things, but what about the automation side of AI? Where can AI help augment the way users interface with tasks?

Adobe Podcast (beta)

As a podcaster, I’ve been on the mic and in the podcast sphere since 2010. It is cool to see how much more podcasting has intersected with teaching and learning in recent years. As a technologist, and now instructional designer, I’ve seen very creative students (and faculty) jump into podcasting over the years, only to get put off by the technology and editing. As an AEL (Adobe Educational Leader), I tried out the new Adobe Podcast beta and can see how Adobe’s AI transcription-based editing interface (as opposed to a traditional waveform editor) could in the future help bring down the technology-skills barrier to production, and potentially open up podcasting (and digital storytelling) to more students and faculty.

Here is a sample using Adobe Podcast beta and hosting it on our Panopto.

Using the interface is really THAT easy, once the transcript processes.

Easy click to delete a word from the transcript | edits the audio of the podcast
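For the curious, here is a rough sketch of how transcript-based editing could work under the hood. This is not Adobe's implementation, which I have not seen. It simply assumes the transcription step yields a start and end time for every word, and that deleting a word means skipping that slice of audio. The names `Word` and `keep_ranges` are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Word:
    """One transcribed word with assumed start/end times in seconds."""
    text: str
    start: float
    end: float


def keep_ranges(words, deleted_indices, total_duration):
    """Return the (start, end) audio ranges left after deleting words.

    Hypothetical helper: an editor could stitch these ranges together
    to produce the edited audio.
    """
    # Time spans to cut, ordered by start time.
    cuts = sorted((words[i].start, words[i].end) for i in deleted_indices)
    ranges = []
    cursor = 0.0
    for start, end in cuts:
        if start > cursor:
            # Keep everything between the previous cut and this one.
            ranges.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < total_duration:
        # Keep the tail after the last cut.
        ranges.append((cursor, total_duration))
    return ranges


words = [Word("so", 0.0, 0.4), Word("um", 0.4, 0.9), Word("welcome", 0.9, 1.5)]
print(keep_ranges(words, {1}, total_duration=1.5))
```

Clicking "um" in the transcript would, in this sketch, leave two audio ranges to join: everything before the word and everything after it.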

As I’ve previously mentioned in a post, Adobe’s choice of UI is very reminiscent of Anchor.fm.

The transcript downloads along with the completed MP3 file, so in theory this brings a whole new level of accessibility to podcasts as ‘corrected’ transcripts are much more easily available after production.

Error mentioned in the demo episode

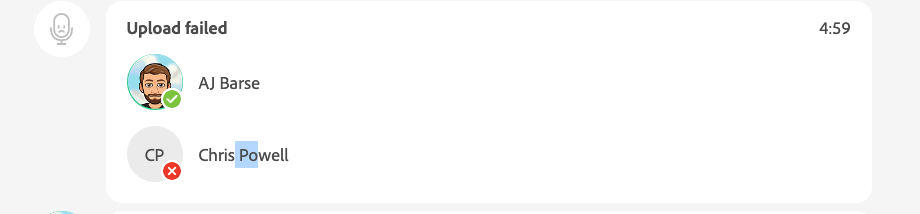

I do hope recording and remote recording get better. When recording with a guest, because the interface works like a transcript, it is difficult to rearrange lines of speech. Where a podcaster might split up an interview and rearrange the chronology to best fit a show, that isn’t easily possible in the current interface. Also, backup audio recording needs to be integrated. As I mentioned in the show, a major part of Chris’ audio never completed its upload, and as a podcaster that is a deal breaker. If a temporary file were generated on the guest’s machine, it would be a suitable backup that could be uploaded later. But that would mean Adobe Podcast would need a way to resync the timing of the conversation so the host and guest audio could be woven back together. Again, that is a feature I don’t currently see.
