Last week we talked about a primitive cornerstone of the connectionist paradigm, Frank Rosenblatt’s Perceptron. Despite its similarity to modern, more successful connectionist models, its lack of expressive ability ultimately led to its downfall. After Marvin Minsky, a prominent leader in the AI community at the time, personally decried the machine for being unable to model the simple exclusive-or (XOR) function, connectionist models rapidly fell out of fashion. The field returned to the top-down approach, using rule-based languages such as Prolog and LISP to create so-called “expert systems”: programs that could reason through relatively complex problems by combining domain-specific knowledge with the relational reasoning that is ubiquitous in rule-based programming. Essentially, the field of artificial intelligence had returned to if-else statements and glorified flowcharts.

Figure 1: Architecture of an “expert system”

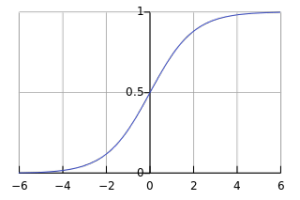

There were two major breakthroughs that brought connectionist AI back from the grave: the McCulloch–Pitts (MCP) neuron and the addition of a hidden layer. Together, these mechanics form the skeleton for what we now refer to as a neural network. The hidden layer is simply an intermediate stage between the input and output of a neural network that allows the model to implicitly map the input data into a new, often higher-dimensional space and create a more abstract representation of the features. Working in a higher dimension gives the hidden layer a far richer feature space, which in turn gives the network far greater expressive power. The MCP neuron is a model loosely based on the structure of neurons in the brain. In the brain, networks of neurons interact in a roughly binary way: they either fire or they don’t fire. In the MCP artificial neuron, “firing” is modeled with an activation function applied to a weighted sum of the neuron’s inputs. The original MCP neuron used a hard threshold; in the networks discussed here, these functions are generally sigmoidal, and squash each hidden unit’s output into a bounded range.

Figure 2: Example of a sigmoidal function (logistic sigmoid)

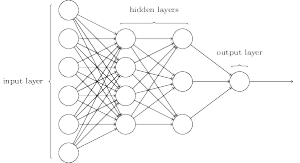
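To make the curve in Figure 2 concrete, here is a minimal sketch of the logistic sigmoid in plain Python. The function name `logistic_sigmoid` is just an illustrative choice, and the two-branch form is one common way to avoid overflow for large negative inputs, not the only way to write it.

```python
import math


def logistic_sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow."""
    if x >= 0:
        # e^(-x) is at most 1 here, so this branch cannot overflow.
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x).
    z = math.exp(x)
    return z / (1.0 + z)


# Inputs far from zero are pushed toward 0 or 1; zero maps to the midpoint.
for value in (-6.0, -1.0, 0.0, 1.0, 6.0):
    print(value, logistic_sigmoid(value))
```

Large negative inputs land near 0, large positive inputs land near 1, and the transition in between is smooth rather than a hard step.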

Mathematically, these activation functions introduce non-linearity into the system, allowing neural networks to generate complex, non-linear decision boundaries (as we saw in last week’s post). The activation shown above isn’t completely binary, but it does force values to fall between 0 and 1. There are other types of activation functions, and depending on the architecture of the neural network, a vanilla logistic sigmoid may not be the best choice. We’ll cover types of activation functions when we talk about deep learning. So with the addition of hidden layers, we end up with an architecture similar to this:

Figure 3: A simple neural network

The edges represent multiplication by a weight matrix and the addition of a bias vector. After each hidden layer, an activation function is applied to the elements of the hidden vector. In the above example, the output is a scalar.
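The description above can be sketched as a forward pass through a network with one hidden layer. This is a rough illustration and not a trained model: the weights are hand-picked (an assumption made for this example) so that the two hidden units act roughly like OR and NAND, and the output unit combines them into XOR, the function a single-layer perceptron cannot represent. The helper names `dense` and `forward` are hypothetical, and applying a sigmoid to the output is also an assumption made so the scalar can be read as a value between 0 and 1.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """One layer of edges: multiply by a weight matrix, then add a bias vector.

    weights[j][i] is the weight on the edge from input i to unit j.
    """
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Input -> hidden layer (with activation) -> scalar output."""
    hidden = [sigmoid(h) for h in dense(x, w_hidden, b_hidden)]
    # Assumption: the output is also passed through a sigmoid.
    return sigmoid(dense(hidden, w_out, b_out)[0])


# Hand-picked illustrative weights (not learned).
W_HIDDEN = [[20.0, 20.0],    # hidden unit 1: roughly OR
            [-20.0, -20.0]]  # hidden unit 2: roughly NAND
B_HIDDEN = [-10.0, 30.0]
W_OUT = [[20.0, 20.0]]       # output unit: roughly AND of the two hidden units
B_OUT = [-30.0]

for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, round(forward(pair, W_HIDDEN, B_HIDDEN, W_OUT, B_OUT)))
```

Without the hidden layer and its non-linear activation, the whole network would collapse into a single linear map and could not separate the XOR cases.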

So how does such a complicated model actually learn? The secret is in the type of operations that compose the network: every single one is differentiable. For a model that is just composed of an input and output layer, optimizing the weights is a simple process: we calculate the derivative of the model’s output error with respect to each of its weights to produce a gradient. The gradient is a vector that points in the direction of steepest ascent for a function. So if we add a small multiple of the negative gradient to our model’s weights, the error shrinks slightly, and repeating this step moves the network toward a better configuration. Imagine a giant, high-dimensional bowl whose height represents how bad our model is, and a ball near the rim that represents our model’s initial configuration. We want to make our model better, so we want to minimize its error. This is analogous to the ball rolling down the sides of the bowl until it reaches its optimal location at the lowest point in the center. This algorithm is called gradient descent, and it is the source of a neural network’s ability to learn. Next week, we’ll discuss the genesis of deep learning, and how gradient descent works with deeper models.
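As a rough illustration of the gradient descent loop described above, here is a minimal sketch for the simplest case: a model with only an input and an output, `w * x + b`, trained on mean squared error. The function name, the toy data, the learning rate, and the step count are all assumptions chosen for this example.

```python
def gradient_descent(xs, ys, learning_rate=0.1, steps=500):
    """Fit y ~ w * x + b by repeatedly stepping against the gradient."""
    w, b = 0.0, 0.0  # the ball's starting position on the bowl
    n = len(xs)
    for _ in range(steps):
        # Error of each prediction under the current weights.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Derivatives of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Move a small step in the direction of steepest descent.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1, so the bottom of the bowl is w = 2, b = 1.
w, b = gradient_descent([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(w, b)
```

The learning rate controls how far the ball moves per step: too large and it overshoots the bottom of the bowl, too small and it takes many more steps to get there.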

Source:

http://www.igcseict.info/theory/7_2/expert/files/stacks_image_5738.png

https://upload.wikimedia.org/wikipedia/commons/thumb/8/88/Logistic-curve.svg/320px-Logistic-curve.svg.png
